LLMs

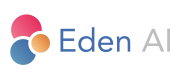

Eden AI provides two types of Large Language Model (LLM) nodes for building advanced workflows: LLM Chat and LLM Multimodal Chat. These nodes give access to current models from providers including Mistral, OpenAI, Anthropic, Google, Meta, Perplexity, and Cohere, among others. Each node has a small set of required parameters plus optional advanced settings, so you can start with a basic setup and fine-tune it for your use case.

LLM Chat Node

The LLM Chat node is used for text-based conversational or generative tasks. It allows you to send a prompt and receive a text response, making it ideal for chatbots, text generation, summarization, or question-answering systems.

Required Parameters:

- Input: A text string containing the prompt or context to be processed by the model.

- Model: The model that processes the prompt. Choose one based on your performance, cost, and latency requirements.

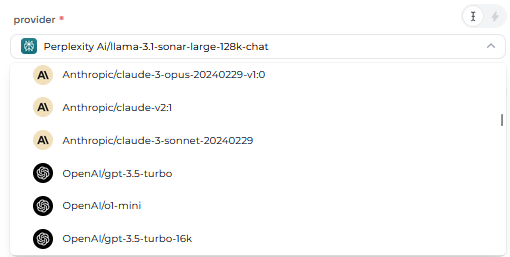

LLM Multimodal Chat Node

The LLM Multimodal Chat node extends functionality by allowing you to combine text inputs with other data types, such as images or audio. It’s ideal for tasks like contextual image analysis or multimodal conversational agents.

Required Parameters:

- Input: Text, combined with additional input modalities (e.g., image, audio).

- Model: A multimodal model that supports the input types you provide. Select one based on your task requirements.

Advanced Configuration (Optional)

Both nodes support advanced configuration for more precise control over model behavior and workflow reliability.

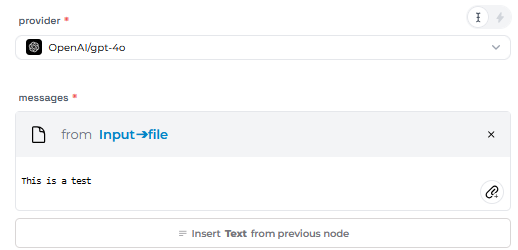

Fallback Provider

Set a fallback provider to keep the workflow running when the primary model fails: the request is then automatically handled by the fallback model.

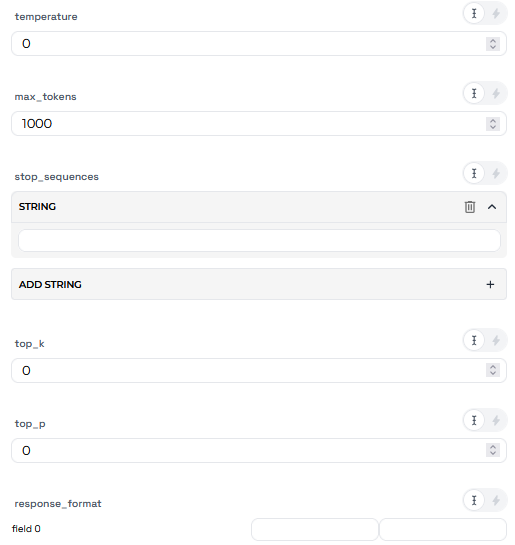
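The fallback behavior described above follows a common "try the primary, then the backup" pattern. The sketch below illustrates that pattern in plain Python; it is not Eden AI's implementation. The `Provider` type, `complete_with_fallback`, and the stand-in `primary` and `fallback` functions are hypothetical, and treating any raised exception as a failure is an assumption (the platform may define failure differently, e.g. timeouts or error responses).

```python
from typing import Callable, List, Sequence

# Hypothetical signature: a provider is any function that takes a prompt
# and returns generated text, raising an exception when the call fails.
Provider = Callable[[str], str]


def complete_with_fallback(prompt: str, providers: Sequence[Provider]) -> str:
    """Try each provider in order and return the first successful response."""
    errors: List[Exception] = []
    for call in providers:
        try:
            return call(prompt)
        except Exception as exc:  # assumption: any error counts as a failure
            errors.append(exc)
    raise RuntimeError(f"all {len(providers)} providers failed: {errors!r}")


# Stand-in providers for illustration only.
def primary(prompt: str) -> str:
    raise ConnectionError("primary provider unavailable")


def fallback(prompt: str) -> str:
    return f"fallback answer to: {prompt}"


print(complete_with_fallback("Summarize this text.", [primary, fallback]))
# prints "fallback answer to: Summarize this text."
```

Listing providers in priority order keeps the logic independent of how many fallbacks you configure; if every provider fails, the collected errors are surfaced together instead of being silently dropped.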

Customizable Parameters:

- Temperature: Adjusts randomness in the response. Higher values (e.g., 0.8) result in more creative outputs, while lower values (e.g., 0.2) produce more deterministic results.

- Top-p: Enables nucleus sampling: the model samples only from the smallest set of most probable tokens whose cumulative probability reaches p.

- Top-k: Limits token selection to the k most probable tokens.

- Max Tokens: Specifies the maximum number of tokens in the response.

- Stop Sequences: Defines one or more strings that, when generated, make the model stop producing further tokens.

- System Prompt (Chat Global Action): Set a global instruction to guide the model’s behavior, e.g., "Act as a helpful assistant."

- Response Format: Specify the expected response structure, such as JSON or plain text.
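To make the sampling parameters above more concrete, here is a minimal, generic sketch of how temperature, top-k, top-p, and stop sequences are commonly applied during decoding. It is an illustration under stated assumptions, not Eden AI's or any provider's implementation: providers differ in details such as the order in which top-k and top-p are applied, whether a temperature of 0 means greedy decoding, and whether the stop sequence itself appears in the output. All function names are hypothetical.

```python
import math
import random
from typing import Dict, List, Optional, Sequence


def softmax_with_temperature(logits: Sequence[float], temperature: float) -> List[float]:
    """Convert raw logits to probabilities; temperature < 1 sharpens, > 1 flattens."""
    scaled = [x / temperature for x in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(x - peak) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def filter_top_k_top_p(probs: Sequence[float], top_k: Optional[int] = None,
                       top_p: Optional[float] = None) -> Dict[int, float]:
    """Keep the k most likely tokens, then the smallest prefix whose mass reaches top_p."""
    ranked = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)
    if top_k is not None:
        ranked = ranked[:top_k]
    if top_p is not None:
        mass = sum(probs[i] for i in ranked)  # renormalize over the top-k survivors
        kept, cumulative = [], 0.0
        for i in ranked:
            kept.append(i)
            cumulative += probs[i] / mass
            if cumulative >= top_p:
                break
        ranked = kept
    total = sum(probs[i] for i in ranked)
    return {i: probs[i] / total for i in ranked}  # renormalized candidate distribution


def sample_next_token(logits: Sequence[float], temperature: float = 1.0,
                      top_k: Optional[int] = None, top_p: Optional[float] = None,
                      rng: Optional[random.Random] = None) -> int:
    """Return the index of the sampled token."""
    if temperature <= 0:
        # Assumption: treat temperature 0 as greedy decoding (pick the most likely token).
        return max(range(len(logits)), key=lambda i: logits[i])
    probs = softmax_with_temperature(logits, temperature)
    candidates = filter_top_k_top_p(probs, top_k, top_p)
    tokens = list(candidates)
    if rng is None:
        rng = random.Random()
    return rng.choices(tokens, weights=[candidates[t] for t in tokens], k=1)[0]


def apply_stop_sequences(text: str, stop_sequences: Sequence[str]) -> str:
    """Truncate text at the earliest stop sequence; the stop text itself is dropped."""
    cut = len(text)
    for stop in stop_sequences:
        idx = text.find(stop) if stop else -1  # ignore empty stop strings
        if idx != -1:
            cut = min(cut, idx)
    return text[:cut]


logits = [2.0, 1.0, 0.1]  # made-up scores for a three-token vocabulary
print(sample_next_token(logits, top_k=1))  # 0: only the most likely token survives
print(apply_stop_sequences("Answer: 42\nUser: next question", ["\nUser:"]))  # Answer: 42
```

Lower temperatures concentrate probability on the top token before any filtering happens, which is why a low temperature combined with a small top-p or top-k produces near-deterministic output.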

Model Flexibility

Both LLM nodes are built to work with the latest models from top providers, offering:

- Dynamic model selection: Adapt workflows based on accuracy, cost, or latency needs.

- Scalability: Support for new model releases and multimodal capabilities.
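Dynamic model selection amounts to a trade-off between accuracy, cost, and latency. The sketch below shows one simple way to reason about that trade-off, assuming you have your own measurements for each candidate model: pick the cheapest model that meets a latency budget and a minimum quality score. `ModelOption`, `choose_model`, and every name and number in `catalog` are hypothetical placeholders, not real models or prices, and this is not how the Eden AI nodes select models internally.

```python
from dataclasses import dataclass
from typing import Sequence


@dataclass(frozen=True)
class ModelOption:
    name: str
    cost_per_1k_tokens: float  # in your billing currency
    p95_latency_ms: float  # latency you measured for your workload
    quality_score: float  # e.g. accuracy on your own evaluation set, 0 to 1


def choose_model(options: Sequence[ModelOption], max_latency_ms: float,
                 min_quality: float) -> ModelOption:
    """Return the cheapest option that meets both the latency and quality thresholds."""
    eligible = [o for o in options
                if o.p95_latency_ms <= max_latency_ms and o.quality_score >= min_quality]
    if not eligible:
        raise ValueError("no model meets the latency and quality constraints")
    return min(eligible, key=lambda o: o.cost_per_1k_tokens)


# Placeholder names and numbers for illustration only.
catalog = [
    ModelOption("model-a", cost_per_1k_tokens=0.50, p95_latency_ms=900, quality_score=0.92),
    ModelOption("model-b", cost_per_1k_tokens=0.10, p95_latency_ms=400, quality_score=0.81),
    ModelOption("model-c", cost_per_1k_tokens=0.05, p95_latency_ms=300, quality_score=0.70),
]
print(choose_model(catalog, max_latency_ms=1000, min_quality=0.8).name)  # model-b
```

Treating latency and quality as hard constraints and cost as the objective is only one option; you could just as well fix a budget and maximize quality instead.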

With these configuration options, you can add conversational and generative AI steps to your workflows and tune their behavior to your requirements.
